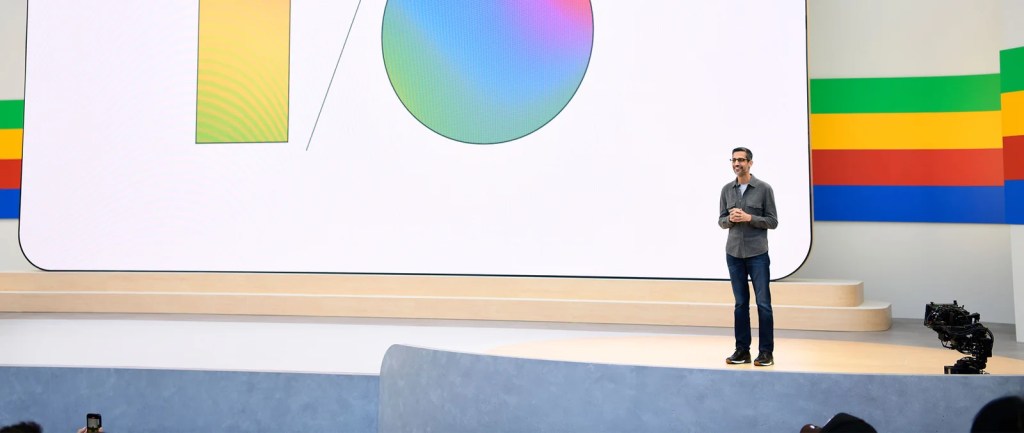

Google’s annual I/O conference was held in California this week. We’re in San Francisco to break down the biggest announcements.

One phrase was repeated throughout the two-hour Google I/O keynote in Mountain View. Alphabet and Google CEO Sundar Pichai and the heads of Search, Android, the Gemini app, Workspace and DeepMind all reiterated that we are now in “the Gemini era.”

Google’s top brass wove the phrase into each presentation, highlighting how Gemini, Google’s AI model, will shape the company’s products. The takeaway from I/O is that AI will be embedded even further into our lives, whether we know about it and like it or not.

“Google is fully in our Gemini era,” says Pichai. “Gemini is more than a chatbot; it’s designed to be your personal, helpful assistant that can help you tackle complex tasks and take actions on your behalf.”

There are undoubtedly productivity gains to be had in this ‘Gemini era.’ Rather than opening Chrome to search for a term, Android users will soon be able to circle part of an image, whether it is stored on their phone or viewed through the camera, to learn more about it.

Project Astra

DeepMind also announced Project Astra, a multimodal AI assistant that can ‘understand the context you’re in, and respond naturally in conversation.’

Powered by Gemini, Project Astra features a ‘Live’ capability.

“Interacting with Gemini should feel conversational and intuitive. So we’re announcing a new Gemini experience that brings us closer to that vision called Live that allows you to have an in-depth conversation with Gemini using your voice,” says Pichai.

A demo provided by Google (above) shows a user scanning an office through the phone’s camera and then asking Gemini if it knows where their glasses are. Gemini correctly identifies where the glasses are and tells the user where to find them.

“Looking further ahead we’ve always wanted to build a universal agent that will be useful in everyday life,” says Pichai. “Project Astra shows multimodal understanding and real-time conversational capabilities.”

From 1 to 2 million tokens in 3 months

The advances made since Gemini was revealed at I/O 2023 are partly due to a significant increase in the size of its context window, the number of tokens the model can take in and work with at once.

According to a Google developer page, a token is ‘equivalent to about 4 characters for Gemini models. 100 tokens are about 60-80 English words.’

Just three months ago, Google announced a groundbreaking 1-million-token context window for Gemini. It has now doubled that figure.

“We’ll be bringing 2-million tokens to Gemini Advanced later this year, making it possible to upload and analyze super dense files like video and long code,” says Pichai.
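To put the jump from 1 million to 2 million tokens in perspective, the rule of thumb quoted from Google’s developer page can be turned into a quick back-of-the-envelope conversion. The sketch below is a rough approximation only: it applies the ~4 characters per token and 60-80 words per 100 tokens figures rather than Gemini’s actual tokenizer, and the function names are illustrative, not part of any Google API.

```python
# Back-of-the-envelope conversions using the rule of thumb quoted above:
# ~4 characters per token, and 100 tokens ~ 60-80 English words.
# These are approximations only, not the output of Gemini's real tokenizer.

CHARS_PER_TOKEN = 4
WORDS_PER_100_TOKENS = (60, 80)  # (low, high) estimate


def approx_characters(tokens: int) -> int:
    """Approximate number of characters that fit in `tokens` tokens."""
    return tokens * CHARS_PER_TOKEN


def approx_word_range(tokens: int) -> tuple[int, int]:
    """Approximate (low, high) English word count for `tokens` tokens."""
    low, high = WORDS_PER_100_TOKENS
    return tokens * low // 100, tokens * high // 100


if __name__ == "__main__":
    # Compare the earlier 1-million-token window with the new 2-million one.
    for window in (1_000_000, 2_000_000):
        low, high = approx_word_range(window)
        print(f"{window:,} tokens ~ {approx_characters(window):,} characters, "
              f"{low:,}-{high:,} words")
```

By this estimate, a 1-million-token window holds roughly 600,000 to 800,000 English words, and a 2-million-token window roughly 1.2 to 1.6 million.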

Integrating Gemini into Gmail, Meet

Gemini is also being integrated into Google Workspace. It can search Gmail and summarise the emails received on a specific topic.

The example given at I/O was a parent’s unread emails about their child’s school activities. Instead of finding and reading each missed email, the parent can get a summary from Gemini along with a dated list of the things that need to be actioned. In the demo, the summary noted the deadline for submitting a school camp consent form, a reminder about an upcoming special school day, and a recap of a parent/teacher Google Meet session the parent had missed.

A similar capability is also being rolled out in Google Photos. Rather than scrolling through thousands of images to find one particular shot, users can let Gemini do the legwork.

“With Ask Photos, you can request what you’re looking for in a natural way, like: ‘Show me the best photo from each national park I’ve visited.’ Google Photos can show you what you’re looking for, saving you from all that scrolling,” a Google statement reads.

Pichai also previewed capabilities Google is still developing and plans to make available to users in the future.

“It’s pretty fun to shop for shoes, and a lot less fun to return them when they don’t fit,” says Pichai.

“Imagine if Gemini could do all the steps for you: Searching your inbox for the receipt … Locating the order number from your email… Filling out a return form… Even scheduling a UPS pickup.”

DeepMind: Google’s brain trust

Demis Hassabis, the CEO of Google DeepMind, took the stage to showcase ‘Veo’, a new generative AI model that can create video from text, image and video prompts.

DeepMind has also been building generative AI tools for making music and film. Wyclef Jean demonstrated DeepMind’s Music AI Sandbox, using it to fuse Haitian and Brazilian beats into a new sound. Actor Donald Glover gathered with friends at his farm in Ojai, California, and gave Veo prompts to create a film.

Kory Mathewson, a Google DeepMind research scientist, was there to help Glover bring his vision to fruition using Veo.

“We are able to bring ideas to life that were otherwise not possible. The core technology is Google DeepMind’s generative video model that has been trained to convert input text into output video,” says Mathewson.

“Everybody is going to become a director and everybody should become a director because at the heart of this is just storytelling,” says Glover.

Developers on Gemini

Gemini’s growing capabilities are also affecting developers outside Alphabet.

“This progress is only possible because of our incredible developer community. You are making it real, through the experiences and applications you build every day,” says Pichai.

Look back on the week that was with hand-picked articles from Australia and around the world. Sign up to the Forbes Australia newsletter here or become a member here.