Synchron founder Tom Oxley explains to Forbes Australia how his partnership with NVIDIA and Apple is training AI direct from the brain to create a “flight simulator” for humans.

Synchron unveiled its “Chiral” AI and its roadmap to using brain-computer interfaces to train AI direct from human neural activity at chip company NVIDIA’s GPU Technology Conference in San Jose, California, Wednesday.

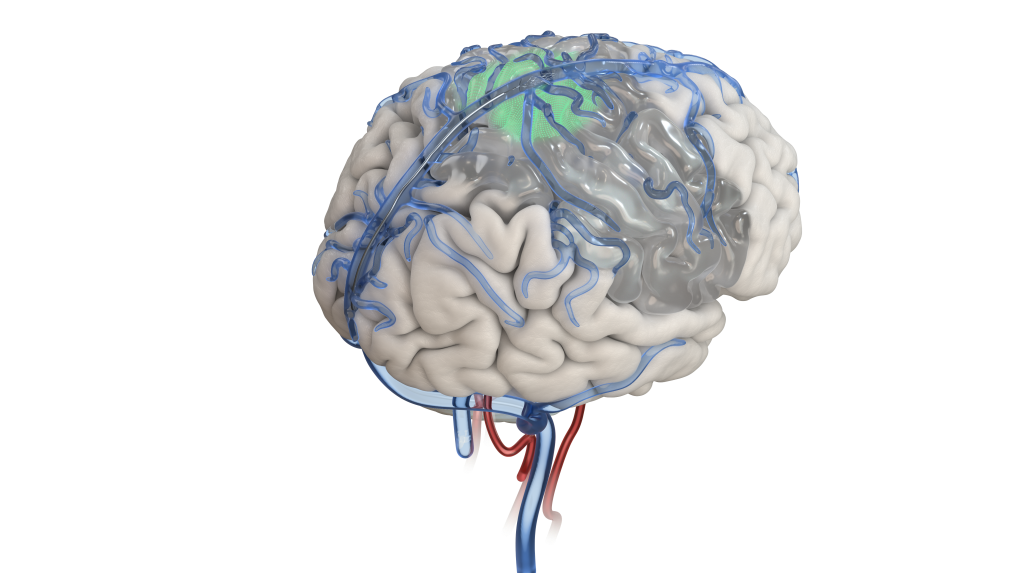

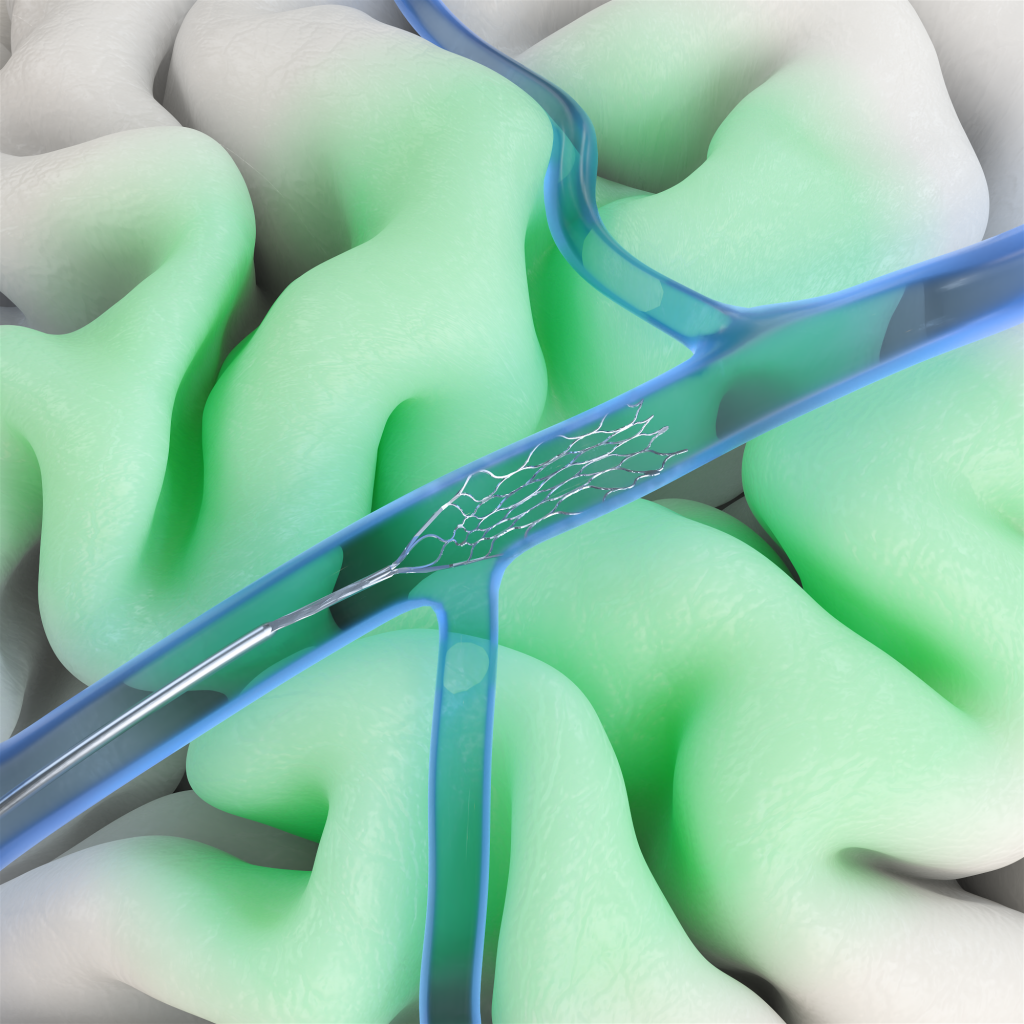

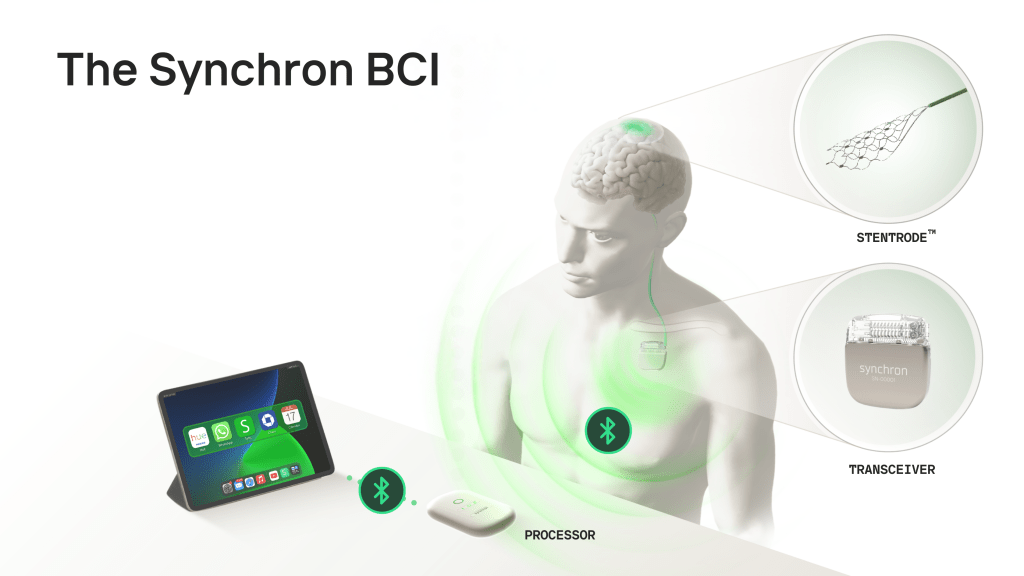

The Australian-founded company released footage of Melbourne man Rodney Gorham, 65, going about his day. Gorham has had a Synchron “Stentrode” in his brain since 2020. He has ALS, and can’t walk, talk, or use his hands.

Yet he puts on a playlist, turns on a fan, feeds his cavoodle, and starts a robot vacuum cleaner, using “direct thought-control” – that is, just his thoughts and his eyes. He received a rousing ovation.

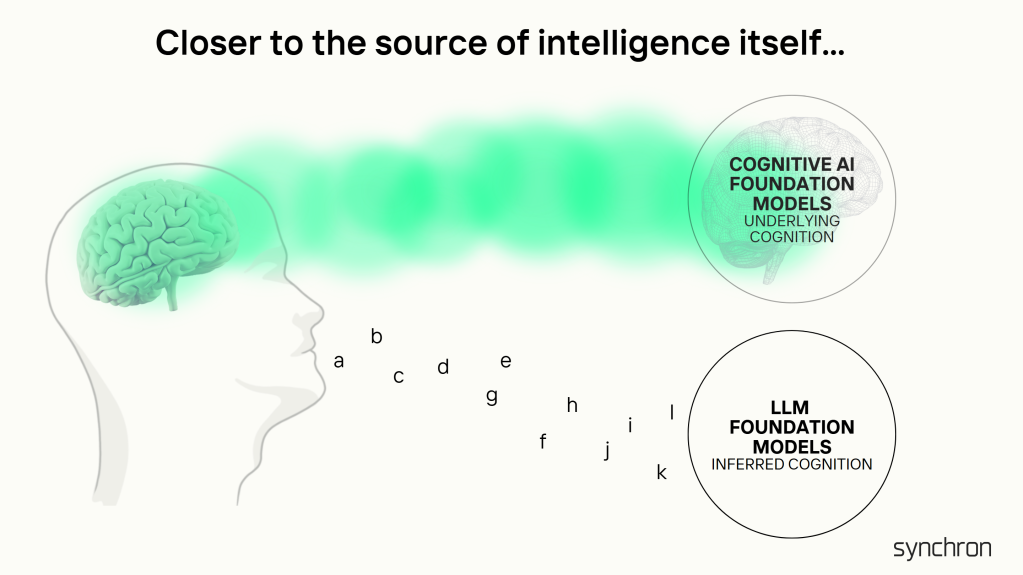

But Synchron is going much deeper than that. It is training “Chiral”, the AI behind such functions, which it describes as a “foundation model of human cognitions, marking the emergence of Cognitive AI – artificial intelligence trained directly on human neural activity”.

Synchron says it is advancing brain-computer interfaces from supervised learning to self-supervised learning, “accelerating the transition by combining large-scale neural data with advanced NVIDIA AI-powered computing”.

Forbes Australia asked Synchron co-founder and CEO Tom Oxley to explain what that meant, as if to his grandmother.

The Grandma explanation

“All of the brain’s activities are represented in electrical pulses. The more of these pulses that you can detect in context, the better. In other words, what did my brain do when X happened to me? What was my brain doing when my partner said that thing to me? What was my brain doing when I was hungry and that thing happened? The more information we can gather, the more we can learn about how the brain acted in a certain environment. From an AI perspective, you then turn that into a token. You can then use that token to engage with other layers of AI.

“So if the body fails and the brain is reacting to an environment, this technology can know what your brain would do in a certain environment, and you can then use that to engage and convert intention directly into action. So this ‘cognitive AI’ concept is intention-to-action. The device detects what you want it to do, and it sends a signal to an action layer of AI that lets you make a choice.

“So in Rodney’s case, he’s looking around the room, he sees his dog, he sees the fan, he sees the light and he decides that he wants to do something. He wants to turn them on. He wants to activate that system.

“The device knows that he wants to do that, connects to the digital world through AI and then acts upon his desire and his will. So it removes the need for the body to engage with the world. I’m calling it AI-enabled BCI. All the BCI is doing on its own is looking at a snapshot in time of what your brain wanted to do.

“NVIDIA’s Holoscan takes the information from the environment and turns it into data. When you look over and you see your robot in the house, the Holoscan knows that you’re looking at it and it connects to the robot and it gives you options.”

A GPT-like moment

Oxley explained that the next stage of their AI modelling will be to take NVIDIA’s Cosmos to create a human “flight simulator”. “Cosmos was an invention by NVIDIA to create simulated environments. So they call it digital twins.

“Let’s say you’ve got a robot in your kitchen, washing dishes. You can create a simulated kitchen and put a bunch of glasses in there for the robot to try and wash. And if the robot makes a mistake and drops a glass, it does that in a simulated environment, and then you can multiply it by a million, it’ll learn how to handle certain situations without having to do it in real life smashing a million real glasses. So by the time you deploy it in the real kitchen, it’s already done a whole bunch of training.

“It’s like a flight simulator. They built it to train systems into various industries, but we want to use it to put our own users in a simulated environment to learn new skills in their BCI.

“What we would do is, if your body’s failed, we recreate your body in a simulated body. You look down, you’re looking at your body, you’re looking at your hands. You can’t control them anymore, you’re paralysed. But if you’re looking at your hands, that activates your brain in a really amazing way. So then we can train the BCI to think it’s looking at a real body and learn a whole bunch of new skills.”

Synchron announced its own AI system, Chiral, pronounced “kiral”. Oxley says it is being trained in the same way as large language models, but on data extracted directly from the brain.

“The way that GPT abstracts knowledge from all of the words on the internet to appear to be very wise and knowledgeable, it does that by applying huge amounts of compute, of training, autoregressive training on massive datasets. If you apply that same principle to brain data, you could potentially pre-train a massive dataset on brain data and extract that same knowledge and wisdom, but it doesn’t come out in words. It comes out in the language of the brain.

“If this works, it’s going to be a GPT-like moment with the brain where it actually extracts out what the brain is trying to do in moments of time. And so that would be an abstraction of cognition. So it would be models of cognition.

“Then we can use that in this ‘intention-to-action’. This would be a very powerful way to help people implement interactions between their brain and layers of AI that just are really hard to even imagine right now.”

Because it will only be possible with a huge data set, Oxley says the prize for this type of technology will go to whichever company gets to scale first in the race for workable brain-computer interfaces. Synchron’s main competitor, Neuralink, has implanted devices in three patients since January 2024.

“We now have 10 users over five years and thousands of hours of data. And we’ll soon have tens of thousands and hundreds of thousands, and millions of hours.

“Over the next three to five years, we’re preparing for our scale-up of data and to use it in ways that try to apply these generative training techniques on brain data at scale. That’s what we’re gonna be calling ‘cognitive AI’. In a nutshell, what I’ve realised is that the destination of BCI is going to be cognitive AI.”

Oxley says Synchron is ramping up its manufacturing to prepare for a clinical trial with 30 to 50 participants. “We’ve been doing a lot behind the scenes to get ready for that. Over the course of the next few years, we’re going to conduct a pivotal trial [the device equivalent of a Phase-3 trial] and hopefully move first to market towards commercial launch.”

Oxley said he cried when he saw the Rodney Gorham video. “We’ve been working with Rodney for a long time and he’s obviously gone through a lot, and what he’s achieved is just phenomenal. It’s just very inspiring to be working with people going through so much and continuing to give energy and break new boundaries. It’s inspiring and just keeps us pushing along, knowing that we’re onto something here.”

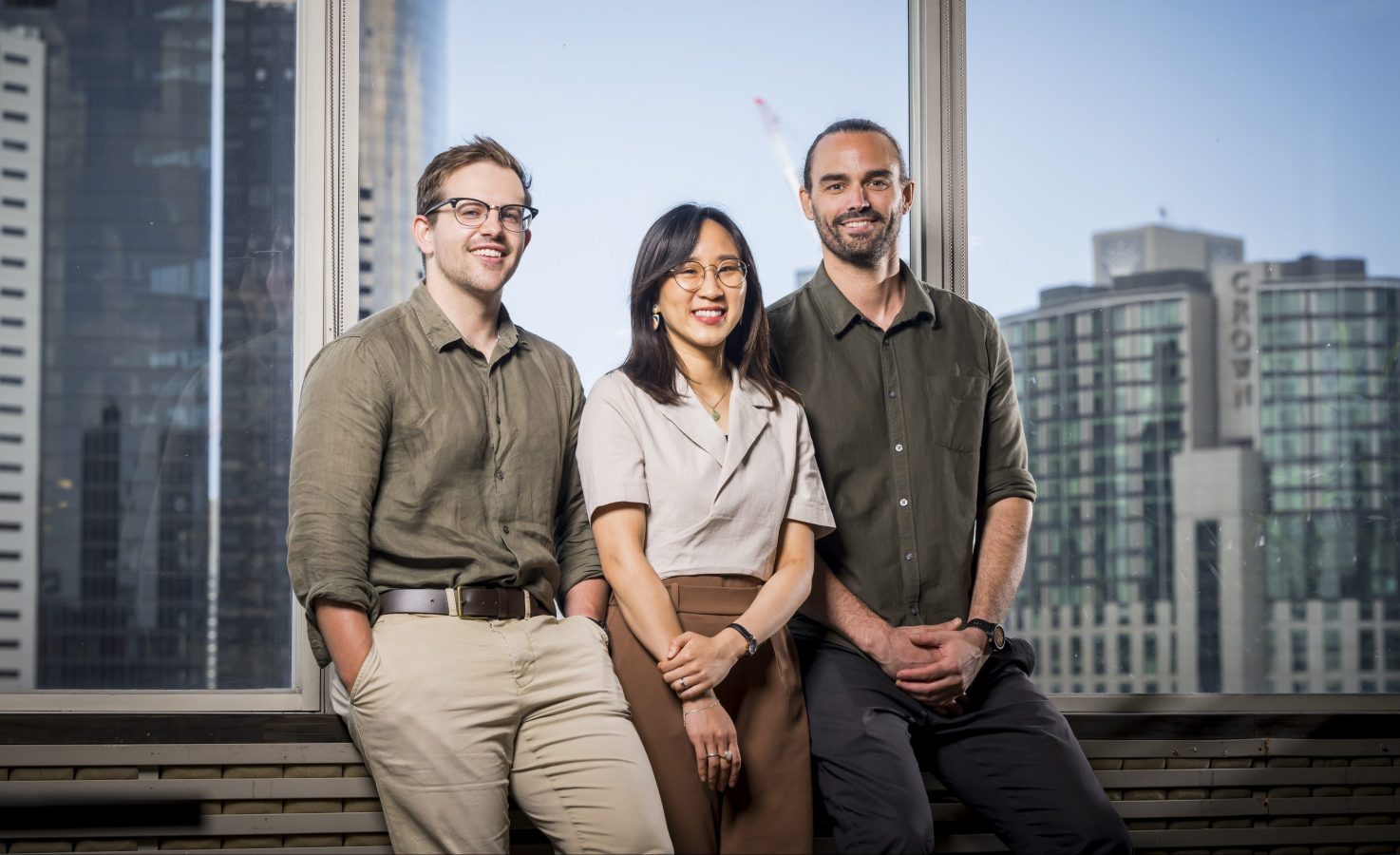

Synchron was founded by Oxley and biomedical engineer Nick Opie in Melbourne in 2012. It put its first brain-computer interface in a human in Melbourne in August 2019, getting the “Stentrode” into the brain via the jugular vein.

Neuralink inserted its first brain-computer interface device in a human in January 2024 and has since implanted two more. Neuralink has developed a surgical robot which it says can rapidly insert flexible probes into the brain.

Neuralink has raised more than US$685 million. Synchron, which counts DARPA, Khosla Ventures, ARCH Venture, Bezos Expeditions and the Gates Foundation among its backers, has raised a total of US$145 million at a valuation of about US$1 billion.
