Meta confirmed that images and captions posted by Australian users are being used to train Meta AI. European users of Meta platforms can opt out, in accordance with data regulations placed on tech companies. No such regulation exists in Australia – so nothing is preventing Meta AI from procuring your public posts.

Six years ago, the European Union instituted strict rules on tech companies under legislation known as the General Data Protection Regulation, or GDPR. Since then, the EU has brought in two more regulatory acts focused on protecting the data of individuals. The Data Act – focused on the rights of users – was enacted in January of this year. The Data Governance Act became active in September 2023.

“Though it was drafted and passed by the European Union (EU), it imposes obligations onto organizations anywhere, so long as they target or collect data related to people in the EU. The regulation was put into effect on May 25, 2018. The GDPR will levy harsh fines against those who violate its privacy and security standard,” an EU statement reads.

As a consequence, Meta and other tech companies have to notify European users that they can opt out of new features that are being offered.

“We are honouring all European objections. If an objection form is submitted before Llama training begins, then that person’s data won’t be used to train those models, either in the current training round or in the future,” Meta states in a post titled ‘Building AI Technology for Europeans in a Transparent and Responsible Way.’

“We want to be transparent with people so they are aware of their rights and the controls available to them. That’s why, since 22 May, we’ve sent more than two billion in-app notifications and emails to people in Europe to explain what we’re doing. These notifications contain a link to an objection form that gives people the opportunity to object to their data being used in our AI modelling efforts.”

That same level of transparency and ability to object is not offered to Meta’s Australian users. The company has stated there is no opportunity to opt out of Meta AI, which launched in Australia on April 19, the same day it was announced.

What if my account is set to private, not public?

Posts set to ‘private’ and the content of Messenger messages will not be fed into Meta’s AI, the company says. Llama 3, Meta’s most advanced AI to date, did not train on content from private posts either, according to Meta.

“Publicly shared posts from Instagram and Facebook – including photos and text – were part of the data used to train the generative AI models underlying the features we announced at Connect. We didn’t train these models using people’s private posts. We also do not use the content of your private messages with friends and family to train our AIs,” a Meta statement reads.

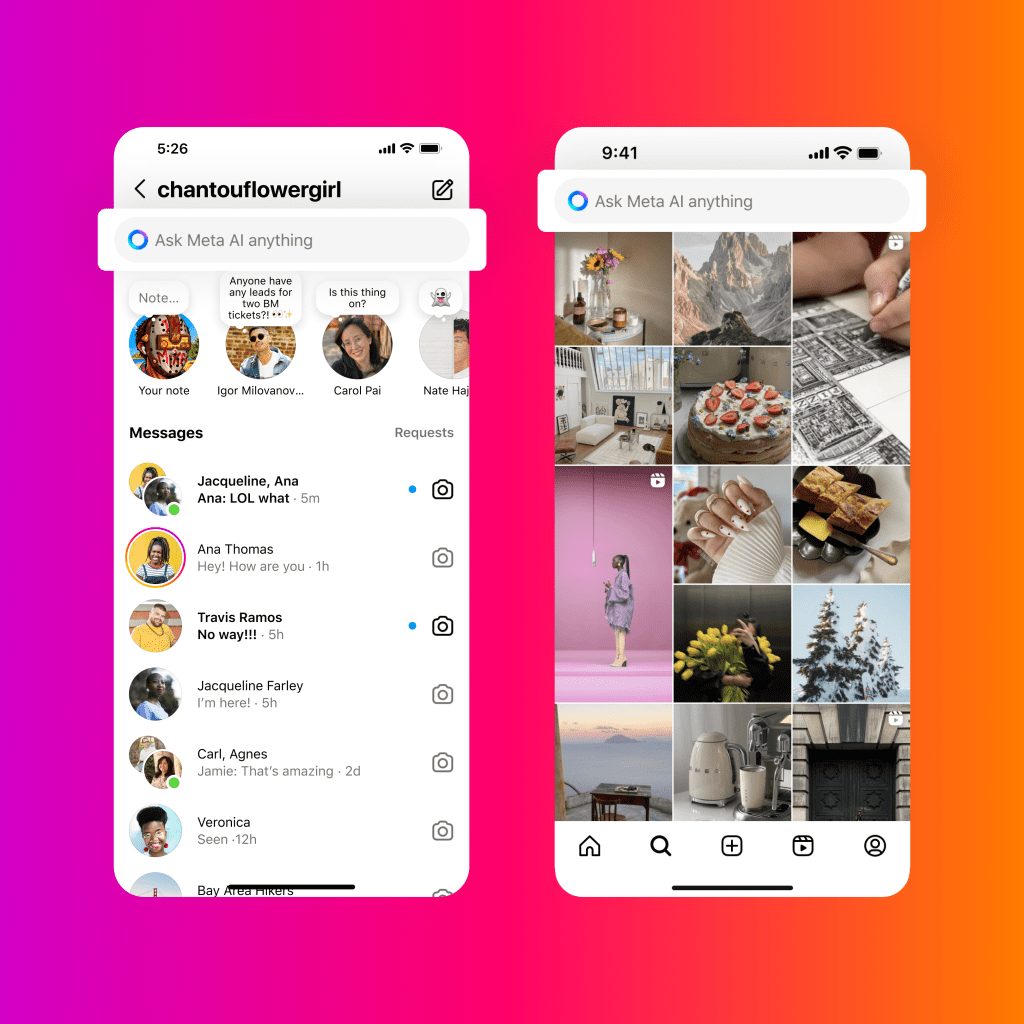

Searching Meta’s platforms is now done with Meta AI

In addition to images and captions in public posts on Facebook and Instagram, Meta AI is also learning from the search functions on those platforms, and on WhatsApp, another Meta platform. Terms entered into the ‘Ask Meta AI anything’ search bar are fed into, and train, Meta AI. There is no way to opt out, making search a less private experience than it has been previously.

Meta provides instructions on its website to delete the content of a chat with AI. However, this only applies to chats that have taken place on Meta AI platforms, and not to images posted to Facebook or Instagram.

“Sometimes, an AI might get off-topic or maybe you just want to delete the information that it has about you,” the Meta instructions read.

“There’s a command that you can type into any individual chat that you have with an AI on Messenger, Instagram or WhatsApp that will reset all of the AIs on that app, including the ones that might be in group chat that you’re in. It does this by deleting the AIs’ copies of your messages and the previous context of the conversation, which are any saved details about you that you’ve shared in that chat. You’ll still see your copies of these chats, but the AIs won’t remember the previous messages.”

The command is /reset-all-ais.

Australia’s eSafety Commission updated its explanation of the search function on Meta platforms this week, to help Australians understand that their chats are not completely private.

“If you want to search a particular word, phrase or user in your past WhatsApp conversations, this is done through Meta AI. In the same search bar, you can enter prompts to ask questions, generate images or start a conversation with Meta AI,” the eSafety statement reads.

“Meta AI cannot currently be turned off once it appears in Meta’s apps, although chats with Meta AI can be muted. It is difficult to opt out of sharing your data with Meta AI and your posts and interactions on apps that incorporate Meta AI may be used to train its AI models.”

Australia partners with the EU to expand safety provisions

The eSafety Commission released a statement this week about working more closely with the EU on safety provisions. The partnership with the European Commission division that enforces the Digital Services Act (DSA) aims to expand transparency and accountability measures for online platform services.

Algorithms and artificial intelligence – including recommender systems, generative AI, and emerging technologies – are listed as a common area of interest for the European Commission and Australia’s eSafety Commission.

Australian AI researchers also have concerns about the lack of robust regulations in this space.

Rebecca Johnson is a doctoral researcher at the University of Sydney and a managing editor of ‘The AI Ethics Journal.’ Johnson says regulation in Australia is not keeping up with the rapid pace of change.

“There are many people working on this but Australia is far behind the USA, the UK, and many other developed nations. The government has put out frameworks, discussion papers, and reports, but we are a far cry from the regulation and standardisation that we need to deal with these technologies,” Johnson tells Forbes Australia.

“I would say the Office of E-Safety has been the most effective in dealing with some of the risks, but there are numerous other considerations that currently fall through the cracks in our existing legal system.”
